Advanced 📚

Local Server

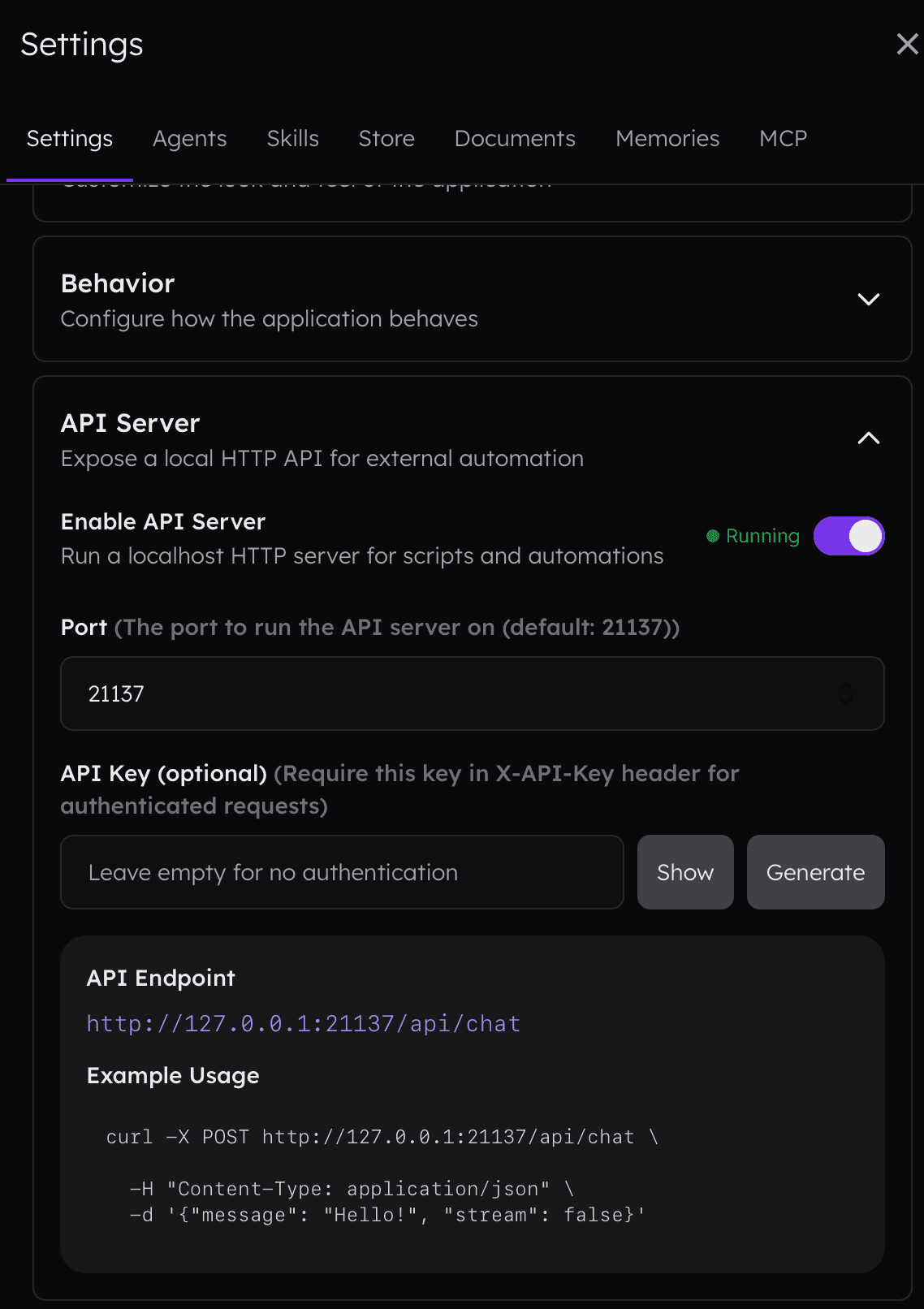

Alice can expose a local server that allows you to interact with the app using scripts and automations.

It provides a localhost HTTP API for external automation, scripts, and integrations with Alice's agent system. The API is turned on from Settings.

Quick Start

Enable the server: Settings → API Server → Enable

Default port: `21137`

Base URL: `http://127.0.0.1:21137`

    # Simple chat request
    curl -X POST http://127.0.0.1:21137/api/chat \
      -H "Content-Type: application/json" \
      -d '{"message": "Hello!", "stream": false}'
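For scripts that prefer Python over curl, the same request can be sketched with the standard library. This is a minimal sketch rather than an official client: the names `build_chat_request` and `chat` are hypothetical, and it assumes the server is enabled on the default port with no API key configured.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:21137"  # default base URL from the Quick Start


def build_chat_request(message: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build the same POST /api/chat request as the curl example."""
    body = json.dumps({"message": message, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/chat",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(message: str, base_url: str = BASE_URL, timeout: float = 60.0) -> dict:
    """Send the request and return the decoded JSON body.

    The 60 second timeout is an arbitrary choice for this sketch.
    """
    request = build_chat_request(message, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With the server running, `chat("Hello!")` should return the parsed JSON body of the reply.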

Authentication

Authentication is optional. If an API key is configured in Settings, include it in each request using either of these headers:

- API key: `X-API-Key: your-api-key`

- Bearer token: `Authorization: Bearer your-api-key`

Public endpoints (no auth required):

- `GET /api/health`

- `GET /api/status`

Protected endpoints (auth required if API key is set):

- All `/api/chat`, `/api/threads`, `/api/agents`, `/api/snippets` routes
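A client can choose between the two header styles in one small helper. The sketch below is an illustration only: `auth_headers` is a hypothetical name, and `ALICE_API_KEY` is an environment variable invented for this example, not something Alice defines.

```python
import os
from typing import Optional


def auth_headers(api_key: Optional[str] = None, use_bearer: bool = False) -> dict:
    """Return the headers to attach to a protected request.

    ALICE_API_KEY is a hypothetical environment variable used by this
    sketch as a fallback when no key is passed explicitly.
    """
    key = api_key if api_key is not None else os.environ.get("ALICE_API_KEY")
    if not key:
        # No key configured: authentication is optional, so send nothing.
        return {}
    if use_bearer:
        return {"Authorization": f"Bearer {key}"}
    return {"X-API-Key": key}
```

The returned dictionary can be merged into the headers of any request to the protected routes. Public endpoints can be called without it.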

Endpoints

Health Check

GET /api/health

Response:

{ "ok": true }

Server Status

GET /api/status

Response:

{ "status": "running", "version": "1.0.0", "activeThreads": 2 }
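Because the health endpoint needs no authentication, a script can poll it before sending work. The sketch below shows one way to do that, assuming the body is `{ "ok": true }` as shown above. `fetch_json` and `wait_until_healthy` are hypothetical helper names, and the retry count and delay are arbitrary.

```python
import json
import time
import urllib.request
from typing import Callable

BASE_URL = "http://127.0.0.1:21137"


def fetch_json(url: str, timeout: float = 2.0) -> dict:
    """GET a URL and decode the JSON body."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def wait_until_healthy(
    base_url: str = BASE_URL,
    attempts: int = 5,
    delay: float = 0.5,
    fetch: Callable[[str], dict] = fetch_json,
) -> bool:
    """Poll GET /api/health until it reports ok, or give up."""
    for attempt in range(attempts):
        try:
            if fetch(f"{base_url}/api/health").get("ok") is True:
                return True
        except (OSError, ValueError):
            # Connection refused, timeout, or a non-JSON body: try again.
            pass
        if attempt < attempts - 1:
            time.sleep(delay)
    return False
```

The `fetch` parameter exists so the polling logic can be exercised without a running server.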

Chat (Main Endpoint)

POST /api/chat

Send a message and receive a response. Supports both streaming (SSE) and non-streaming modes.

Request Body

| Field | Type | Default | Description |
|---|---|---|---|
| `message` | string | null | The message/prompt to send |
| `stream` | boolean | true | Stream response via SSE |
| `thread_id` | string | null | Continue existing conversation (creates new if omitted) |
| `agent_id` | string | null | Agent UUID or name to use |
| `snippet_id` | string | null | Snippet/skill UUID to activate |
| `model` | string | null | Model override (e.g., `"anthropic:claude-sonnet-4-20250514"`) |
| `temperature` | number | null | Temperature (0.0 to 2.0) |
| `max_tokens` | number | null | Maximum output tokens |

Field aliases (all accepted):

- `thread_id` / `threadId` / `conversation_id` / `conversationId`

- `agent_id` / `agentId` / `assistant_id` / `assistantId`

- `snippet_id` / `snippetId`

- `max_tokens` / `maxTokens`
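The request body can be assembled with a small helper that leaves unset fields out. This is a sketch under assumptions: `build_chat_payload` is a hypothetical name, omitting a field is assumed to behave the same as its `null` default, and the client-side temperature check only mirrors the documented range (whether the server enforces it is not stated). It uses the snake_case names, which are among the accepted aliases.

```python
from typing import Optional


def build_chat_payload(
    message: str,
    *,
    stream: bool = True,
    thread_id: Optional[str] = None,
    agent_id: Optional[str] = None,
    snippet_id: Optional[str] = None,
    model: Optional[str] = None,
    temperature: Optional[float] = None,
    max_tokens: Optional[int] = None,
) -> dict:
    """Build a JSON-serialisable body for POST /api/chat."""
    if temperature is not None and not 0.0 <= temperature <= 2.0:
        # Client-side guard based on the documented 0.0 to 2.0 range.
        raise ValueError("temperature must be between 0.0 and 2.0")
    payload = {"message": message, "stream": stream}
    optional = {
        "thread_id": thread_id,
        "agent_id": agent_id,
        "snippet_id": snippet_id,
        "model": model,
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    # Only include the fields the caller actually set.
    payload.update({k: v for k, v in optional.items() if v is not None})
    return payload
```

For example, `build_chat_payload("Hi", stream=False, max_tokens=256)` yields a body with only `message`, `stream`, and `max_tokens`.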

Non-Streaming Response

    curl -X POST http://127.0.0.1:21137/api/chat \
      -H "Content-Type: application/json" \
      -d '{ "message": "What is 2+2?", "stream": false }'

Response:

    {
      "threadId": "550e8400-e29b-41d4-a716-446655440000",
      "messageId": "6ba7b810-9dad-11d1-80b4-00c04fd430c8",
      "content": "2 + 2 = 4",
      "status": "completed",
      "toolCalls": null,
      "usage": { "inputTokens": 12, "outputTokens": 8 }
    }
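A script will usually want the reply as a typed value rather than a raw dictionary. The sketch below maps the sample response above onto a dataclass. `ChatResult` and `parse_chat_response` are hypothetical names, and treating `usage` as possibly absent is an assumption made for safety, not documented behaviour.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class ChatResult:
    thread_id: str
    message_id: str
    content: str
    status: str
    tool_calls: Optional[list]
    input_tokens: Optional[int]
    output_tokens: Optional[int]


def parse_chat_response(raw: str) -> ChatResult:
    """Decode a non-streaming /api/chat response body."""
    data = json.loads(raw)
    # Assumption: usage may be missing or null, so fall back to an empty dict.
    usage = data.get("usage") or {}
    return ChatResult(
        thread_id=data["threadId"],
        message_id=data["messageId"],
        content=data["content"],
        status=data["status"],
        tool_calls=data.get("toolCalls"),
        input_tokens=usage.get("inputTokens"),
        output_tokens=usage.get("outputTokens"),
    )
```

Keeping `thread_id` from the result makes it easy to continue the same conversation in a later request.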

Streaming Response (SSE)

    curl -X POST http://127.0.0.1:21137/api/chat \
      -H "Content-Type: application/json" \
      -H "Accept: text/event-stream" \
      -d '{ "message": "Tell me a joke", "stream": true }'

SSE Events:

| Event type | Description | Payload |
|---|---|---|
| `start` | Stream started | `{ threadId, messageId }` |
| `thread_info` | Thread ID | `{ threadId }` |
| `text` | Text chunk | `{ delta: "..." }` |
| `tool_call_start` | Tool execution started | `{ id, name, arguments? }` |
| `tool_call_result` | Tool execution completed | `{ id, name, result, isError? }` |
| `thinking_start` | Thinking started | `{}` |
| `thinking_delta` | Thinking content | `{ delta: "..." }` |
| `thinking_end` | Thinking completed | |
| `complete` | Stream finished | `{ usage? }` |
| `error` | Error occurred | `{ message, code? }` |

Example SSE stream:

    data: {"type":"start","threadId":"temp-123","messageId":"msg-456"}
    data: {"type":"thread_info","threadId":"550e8400-e29b-41d4-a716-446655440000"}
    data: {"type":"text","delta":"Why did the"}
    data: {"type":"text","delta":" chicken cross"}
    data: {"type":"text","delta":" the road?"}
    data: {"type":"complete","usage":{"inputTokens":10,"outputTokens":25}}
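The stream above can be consumed line by line. The following is a minimal sketch, assuming each event arrives as a single `data:` line holding a JSON object with a `type` field, as in the example. `iter_sse_events` and `collect_text` are hypothetical names, and the exact form of keep-alive lines is not documented, so the parser simply skips anything that is not a `data:` line.

```python
import json
from typing import Iterable, Iterator


def iter_sse_events(lines: Iterable[str]) -> Iterator[dict]:
    """Yield the decoded JSON payload of every `data:` line."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            # Blank separators and any non-data lines are ignored.
            continue
        payload = line[len("data:"):].strip()
        if payload:
            yield json.loads(payload)


def collect_text(lines: Iterable[str]) -> str:
    """Join all text deltas until the stream completes."""
    parts = []
    for event in iter_sse_events(lines):
        kind = event.get("type")
        if kind == "text":
            parts.append(event["delta"])
        elif kind == "error":
            raise RuntimeError(event.get("message", "unknown error"))
        elif kind == "complete":
            break
    return "".join(parts)
```

When reading from an open `urllib` response, the lines can be supplied as `(line.decode("utf-8") for line in resp)`. Other event types such as `tool_call_start` or `thinking_delta` pass through `iter_sse_events` unchanged and can be handled by the caller.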

Continue Thread

POST /api/threads/:thread_id/send

Send a message to an existing conversation thread.

    curl -X POST http://127.0.0.1:21137/api/threads/550e8400-e29b-41d4-a716-446655440000/send \
      -H "Content-Type: application/json" \
      -d '{"message": "Follow up question", "stream": false}'
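In a script, the thread ID normally comes from an earlier reply rather than being typed by hand. The sketch below builds the follow-up request from a previous non-streaming response. `build_follow_up_request` is a hypothetical name, and the sketch assumes the earlier reply carries a `threadId` field as in the sample response.

```python
import json
import urllib.parse
import urllib.request

BASE_URL = "http://127.0.0.1:21137"


def build_follow_up_request(
    first_response: dict, message: str, base_url: str = BASE_URL
) -> urllib.request.Request:
    """Build a POST to /api/threads/:thread_id/send from an earlier reply."""
    # Percent-encode the ID so unexpected characters cannot alter the path.
    thread_id = urllib.parse.quote(first_response["threadId"], safe="")
    body = json.dumps({"message": message, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/threads/{thread_id}/send",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

The resulting request can be sent with `urllib.request.urlopen`, and an API key header added where one is configured.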

Get Thread

GET /api/threads/:thread_id

Retrieve thread details.

List Agents

GET /api/agents

Response:

    {
      "agents": [
        {
          "uuid": "alice-default",
          "name": "Alice",
          "description": "Default assistant",
          "isActive": true
        }
      ],
      "activeId": "alice-default"
    }
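Given a listing like the one above, a script can look up the active agent before choosing an `agent_id` for a chat request. This sketch assumes `activeId` matches the `uuid` of one of the listed agents, as it does in the sample. `active_agent` is a hypothetical helper name.

```python
from typing import Optional


def active_agent(listing: dict) -> Optional[dict]:
    """Return the agent whose uuid equals activeId, or None if absent."""
    active_id = listing.get("activeId")
    for agent in listing.get("agents", []):
        if agent.get("uuid") == active_id:
            return agent
    return None
```

The `uuid` or `name` of the returned agent can then be passed as `agent_id`.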

Get Agent

GET /api/agents/:id

Retrieve agent details by UUID.

List Snippets

GET /api/snippets

Response:

    {
      "snippets": [
        {
          "uuid": "snippet-123",
          "name": "Code Review",
          "description": "Review code for issues",
          "category": "development",
          "icon": "code"
        }
      ]
    }

Get Snippet

GET /api/snippets/:id

Retrieve snippet details by UUID.

Error Responses

All errors return JSON with this structure:

{ "error": "Error description", "code": "ERROR_CODE" }
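Since every error shares this shape, a client can turn it into one exception type. The sketch below is illustrative: `AliceApiError` and `error_from_response` are hypothetical names, the HTTP status is whatever the caller observed (the status codes the server uses are not listed here), and the fallback for an unparseable body is an assumption.

```python
import json
from typing import Optional


class AliceApiError(Exception):
    """Error raised for a JSON error body returned by the local server."""

    def __init__(self, status: int, message: str, code: Optional[str] = None):
        super().__init__(message)
        self.status = status
        self.message = message
        self.code = code


def error_from_response(status: int, body: bytes) -> AliceApiError:
    """Convert an error response body into an AliceApiError."""
    try:
        data = json.loads(body)
    except ValueError:
        # Not valid JSON (or not valid UTF-8): fall back to a generic message.
        data = {}
    if not isinstance(data, dict):
        data = {}
    return AliceApiError(status, data.get("error", "Unknown error"), data.get("code"))
```

A caller using `urllib` could catch `urllib.error.HTTPError`, pass its `code` and `read()` result to `error_from_response`, and raise the returned exception.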

Notes

- Server binds to `127.0.0.1` only (localhost), so it is not accessible from other machines

- CORS is enabled for all origins (development-friendly)

- Keep-alive interval for SSE: 15 seconds

- The API uses Alice's existing chat logic and agent system

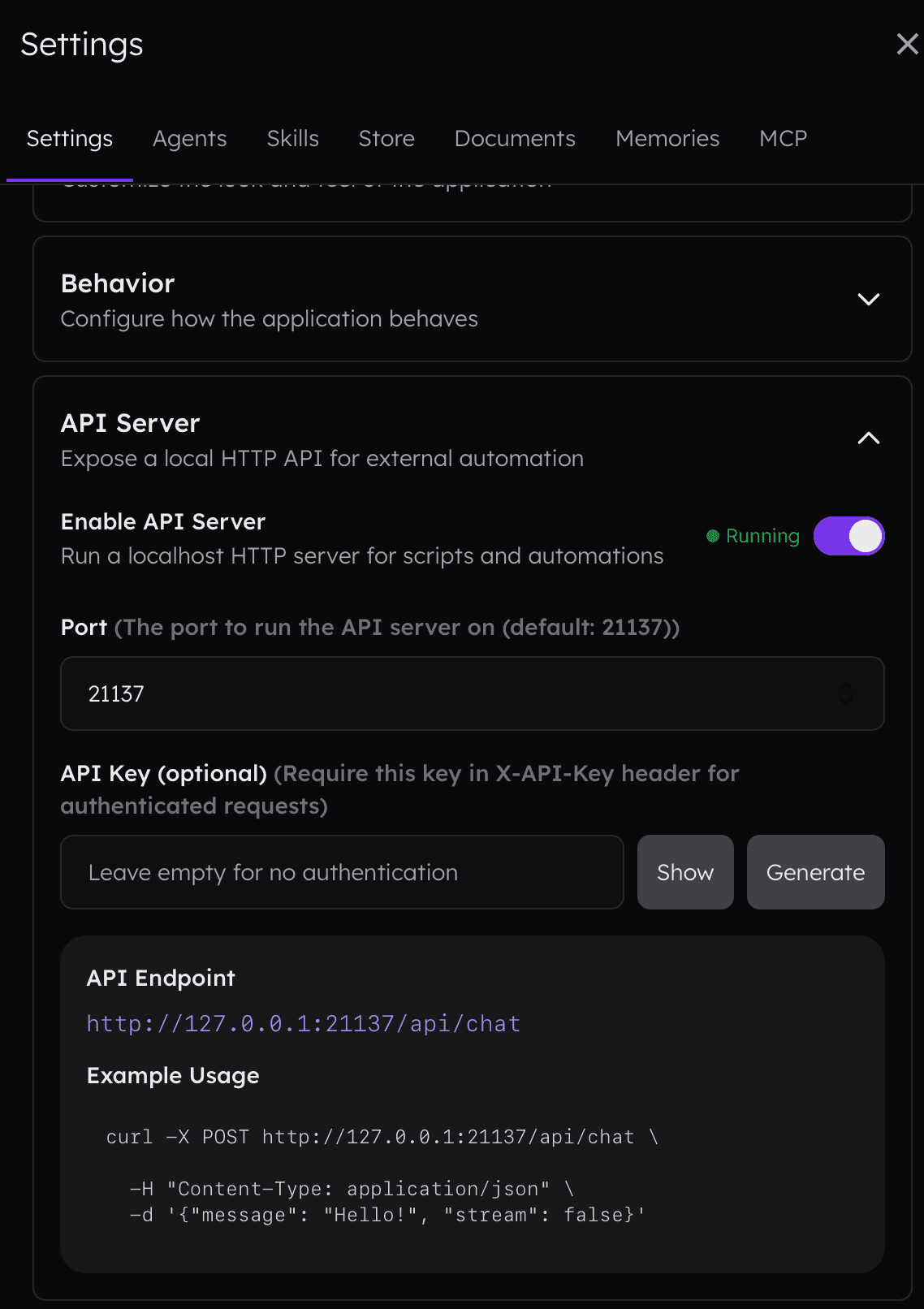

Advanced 📚

Local Server

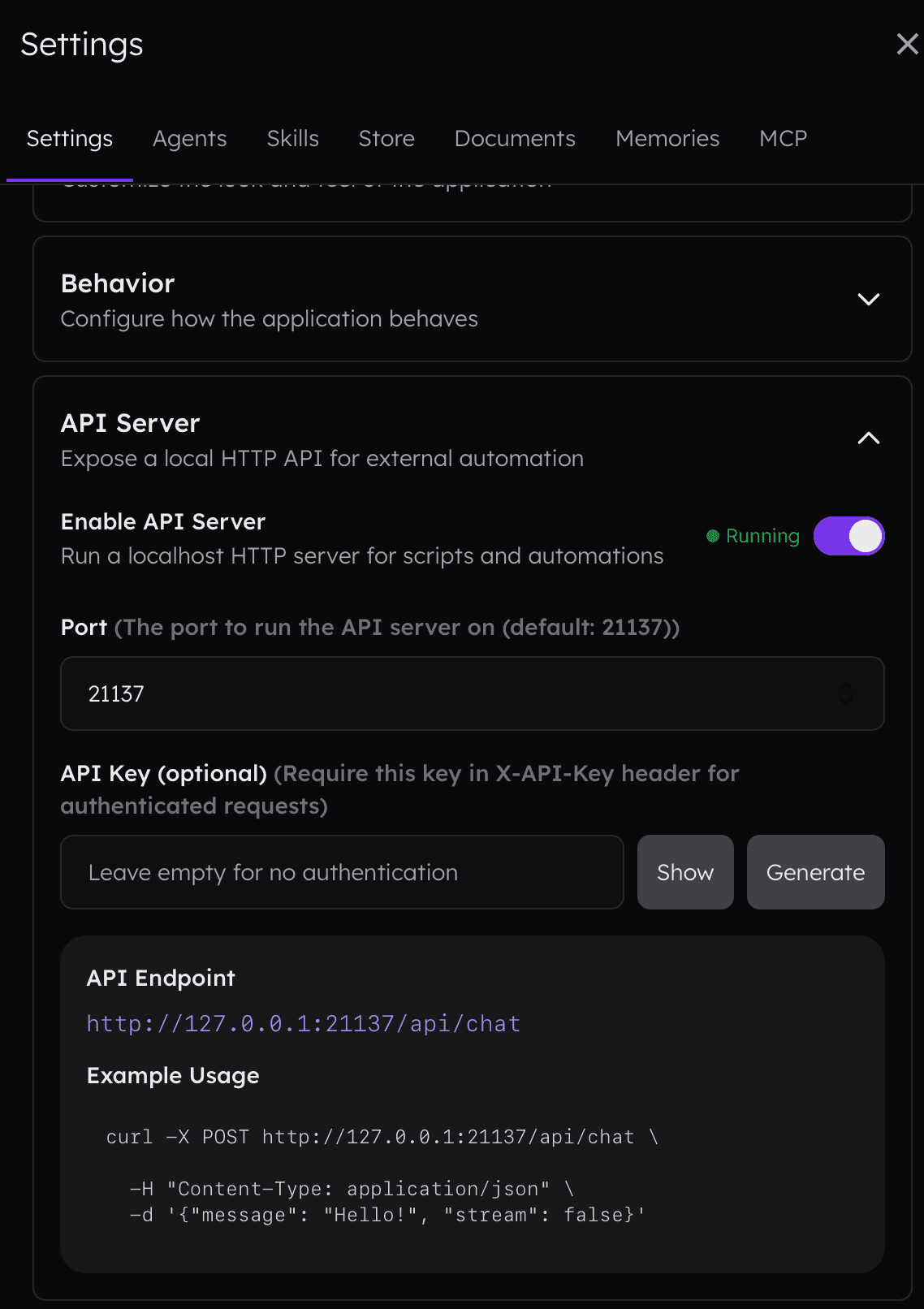

Alice can expose a local server that allows you to interact with the app using scripts and automations.

A localhost HTTP API for external automation, scripts, and integrations with Alice's agent system. To enable HTTP API, go to the settings:

Quick Start

Enable the server: Settings → API Server → Enable

Default port: `21137`

Base URL: `http://127.0.0.1:21137`

# Simple chat request curl -X POST http://127.0.0.1:21137/api/chat \ -H "Content-Type: application/json" \ -d '{"message": "Hello!", "stream": false}'

Authentication

Authentication is optional. If an API key is configured in Settings, include it in requests:

API Key `X-API-Key: your-api-key`

Bearer Token `Authorization: Bearer your-api-key`

Public endpoints (no auth required):

- `GET /api/health`

- `GET /api/status`

Protected endpoints (auth required if API key is set):

- All `/api/chat`, `/api/threads`, `/api/agents`, `/api/snippets` routes

Endpoints

Health Check

GET /api/health

Response:

{ "ok": true }

Server Status

GET /api/status

Response:

{ "status": "running", "version": "1.0.0", "activeThreads": 2 }

Chat (Main Endpoint)

POST /api/chat

Send a message and receive a response. Supports both streaming (SSE) and non-streaming modes.

Request Body

Field | Type | Default | Description |

|---|---|---|---|

message | string | null | The message/prompt to send |

stream | boolean | true | Stream response via SSE |

thread_id | string | null | Continue existing conversation (creates new if omitted) |

agent_id | string | null | Agent UUID or name to use |

snippet_id | string | null | Snippet/skill UUID to activate |

model | string | null | Model override (e.g., `"anthropic:claude-sonnet-4-20250514"`) |

temperature | number | null | Temperature (0.0 - 2.0) |

max_tokens | number | null | Maximum output tokens |

Field aliases (all accepted):

- `thread_id` / `threadId` / `conversation_id` / `conversationId`

- `agent_id` / `agentId` / `assistant_id` / `assistantId`

- `snippet_id` / `snippetId`

- `max_tokens` / `maxTokens`

Non-Streaming Response

curl -X POST http://127.0.0.1:21137/api/chat \ -H "Content-Type: application/json" \ -d '{ "message": "What is 2+2?", "stream": false }'

Response:

{ "threadId": "550e8400-e29b-41d4-a716-446655440000", "messageId": "6ba7b810-9dad-11d1-80b4-00c04fd430c8", "content": "2 + 2 = 4", "status": "completed", "toolCalls": null, "usage": { "inputTokens": 12, "outputTokens": 8 } }

Streaming Response (SSE)

curl -X POST http://127.0.0.1:21137/api/chat \ -H "Content-Type: application/json" \ -H "Accept: text/event-stream" \ -d '{ "message": "Tell me a joke", "stream": true }'

SSE Events:

Event type | Description | Payload |

|---|---|---|

start | Stream started | { threadId, messageId } |

thread_info | Thread ID | { threadId } |

text | Text Chunk | { delta: "..." } |

tool_call_start | Tool execution started | { id, name, arguments? } |

tool_call_result | Tool execution completed | { id, name, result, isError? } |

thinking_start | Thinking started | {} |

thinking_delta | Thinking content | { delta: "..." } |

thinking_end | Thinking completed | |

complete | Stream finished | { usage? } |

error | Error occured | { message, code? } |

Example SSE stream:

data: {"type":"start","threadId":"temp-123","messageId":"msg-456"} data: {"type":"thread_info","threadId":"550e8400-e29b-41d4-a716-446655440000"} data: {"type":"text","delta":"Why did the"} data: {"type":"text","delta":" chicken cross"} data: {"type":"text","delta":" the road?"} data: {"type":"complete","usage":{"inputTokens":10,"outputTokens":25}}

Continue Thread

POST /api/threads/:thread_id/send

Send a message to an existing conversation thread.

curl -X POST http://127.0.0.1:21137/api/threads/550e8400-e29b-41d4-a716-446655440000/send \ -H "Content-Type: application/json" \ -d '{"message": "Follow up question", "stream": false}'

Get thread

GET /api/threads/:thread_id

Retrieve thread details.

List agents

GET /api/agents

Response:

{ "agents": [ { "uuid": "alice-default", "name": "Alice", "description": "Default assistant", "isActive": true } ], "activeId": "alice-default" }

Get Agent

GET /api/agents/:id

Retrieve agent details by UUID.

List Snippets

GET /api/snippets

Reponse:

{ "snippets": [ { "uuid": "snippet-123", "name": "Code Review", "description": "Review code for issues", "category": "development", "icon": "code" } ] }

Get Snippet

GET /api/snippets/:id

Retrieve snippet details by UUID.

Error Responses

All errors return JSON with this structure:

{ "error": "Error description", "code": "ERROR_CODE" }

Notes

- Server binds to `127.0.0.1` only (localhost) — not accessible from other machines

- CORS is enabled for all origins (development-friendly)

- Keep-alive interval for SSE: 15 seconds

- The API uses Alice's existing chat logic and agent system